In the fast-paced world of social media, where a single post from an influential figure can spark widespread debate, pausing to fact-check is more important than ever. On August 19, 2025, Paytm founder Vijay Shekhar Sharma posted on X (formerly Twitter):

“If you are part of a WhatsApp group. Today onwards WhatsApp is allowing AI to read chats. ‼️‼️ So enable this setting to block it.”

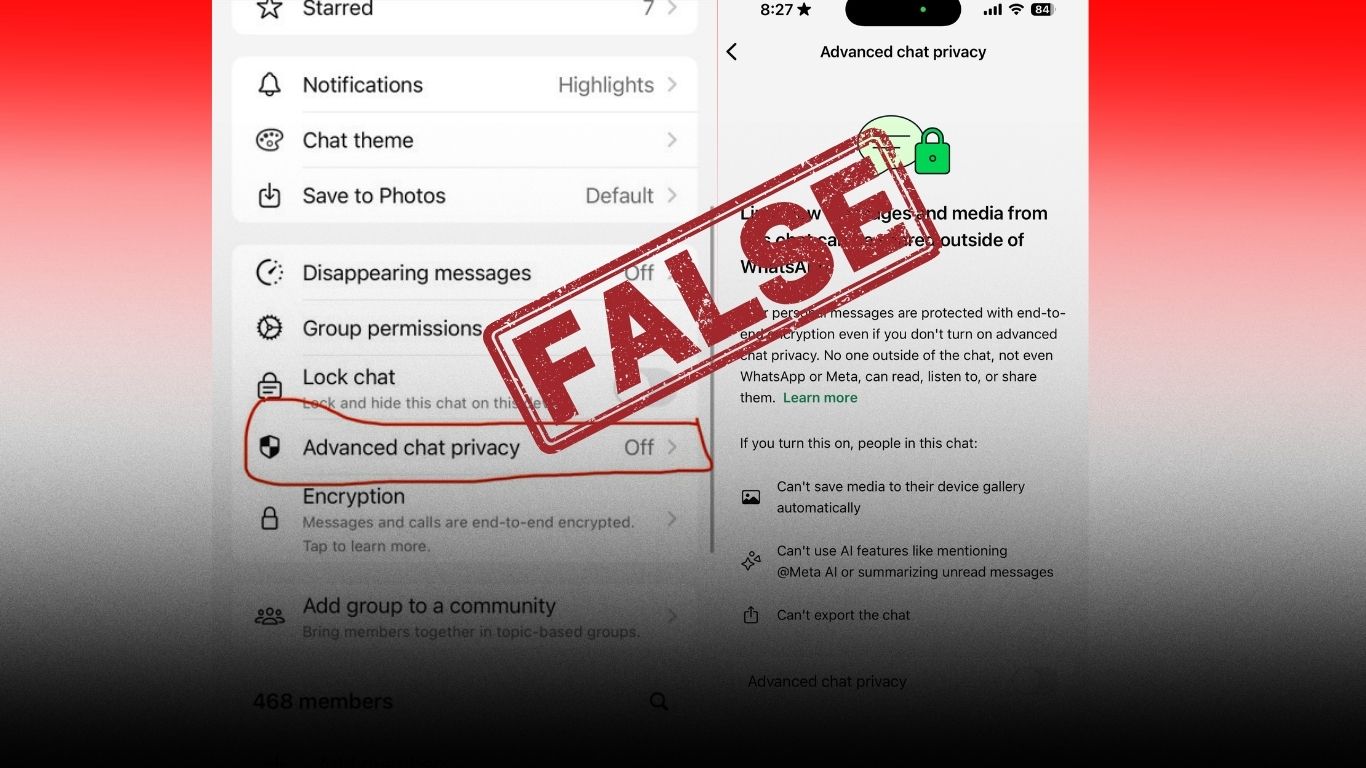

He attached screenshots pointing users to the Advanced Chat Privacy setting. The message quickly went viral, gathering thousands of reactions. For many, Sharma’s stature as a respected entrepreneur in India’s fintech ecosystem gave the claim immediate weight. With WhatsApp deeply embedded in daily life for communication, payments, and business, the idea that AI could be scanning private chats was bound to spark concern.

But a closer look reveals a different story. Sharma’s post, though perhaps motivated by caution, was inaccurate and misleading. WhatsApp’s integration of Meta AI does not mean AI is automatically combing through conversations. To understand why the claim misrepresents reality, we need to unpack what Meta AI on WhatsApp actually does, how privacy safeguards are structured, and why such fears resonate with users in the first place.

Sharma implied that WhatsApp had quietly enabled Meta AI to scan chats starting August 19, unless users took action by enabling Advanced Chat Privacy. His phrasing, “today onwards,” gave the impression of an immediate shift in how WhatsApp handles data.

The claim connected with existing anxieties about AI integration. Earlier in 2025, similar rumors had circulated online, triggered by the introduction of features like AI-generated summaries and the presence of a permanent Meta AI button in the app. By framing the update as a default intrusion, Sharma’s post played into the belief that AI had crossed another line into user privacy.

The Facts: What Meta AI Can—and Cannot—Do

WhatsApp has repeatedly clarified that Meta AI does not have blanket access to chats. The company’s official position is straightforward:

- End-to-end encryption remains intact. Regular personal and group messages are not accessible to WhatsApp, Meta, or its AI systems.

- AI works only when invited. Meta AI processes content only when users explicitly engage it—by tagging @Meta AI in a chat or activating an AI-powered tool.

- AI interactions are logged. Users can check the Message Info screen to confirm when Meta AI was involved in a conversation.

Why the confusion persists

Although Sharma’s claim is wrong on the specifics, it reflects real skepticism about Meta’s intentions. Several factors contribute to this distrust:

- Meta’s history with privacy. Past controversies—like the Cambridge Analytica scandal, unencrypted backups, or aggressive data collection for ad targeting—make users wary of new features, even if officially optional.

- The permanence of AI integration. The unremovable Meta AI button in WhatsApp reinforces the sense that AI is being forced into the user experience.

- Limits of encryption in AI use. While regular chats remain end-to-end encrypted, messages sent to Meta AI are not. Meta says it uses “Private Processing” to protect prompts, but the lack of end-to-end encryption leaves room for doubt.

- Per-chat privacy controls. The Advanced Chat Privacy setting must be enabled chat by chat, rather than globally, which feels cumbersome and raises suspicions about default choices.

Against this backdrop, Sharma’s post tapped into a familiar unease: that large tech companies often expand data collection first and clarify later.

As a founder whose work has reshaped India’s fintech sector, Sharma commands trust among millions. His words can encourage users to adopt safer practices—but they can also fuel unnecessary alarm if imprecise. In an era where misinformation spreads quickly, public figures carry an added responsibility to distinguish between potential risks and verified facts.

At the same time, Meta’s reassurances should not be accepted uncritically either. Its mixed record means skepticism is healthy, and demands for greater transparency are justified. Trust in technology depends on clarity and accountability, not blind acceptance of corporate statements.